Shopping Sites

Global Online Shopping Sites

Shop now

Latest Event

Latest News

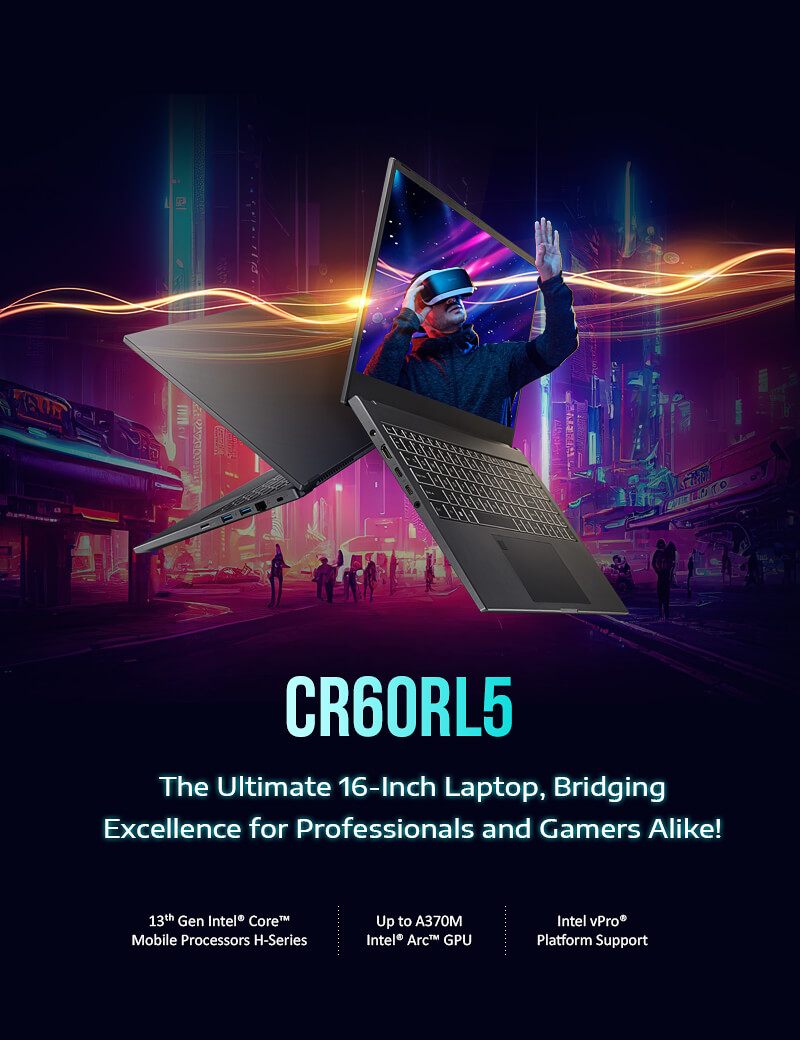

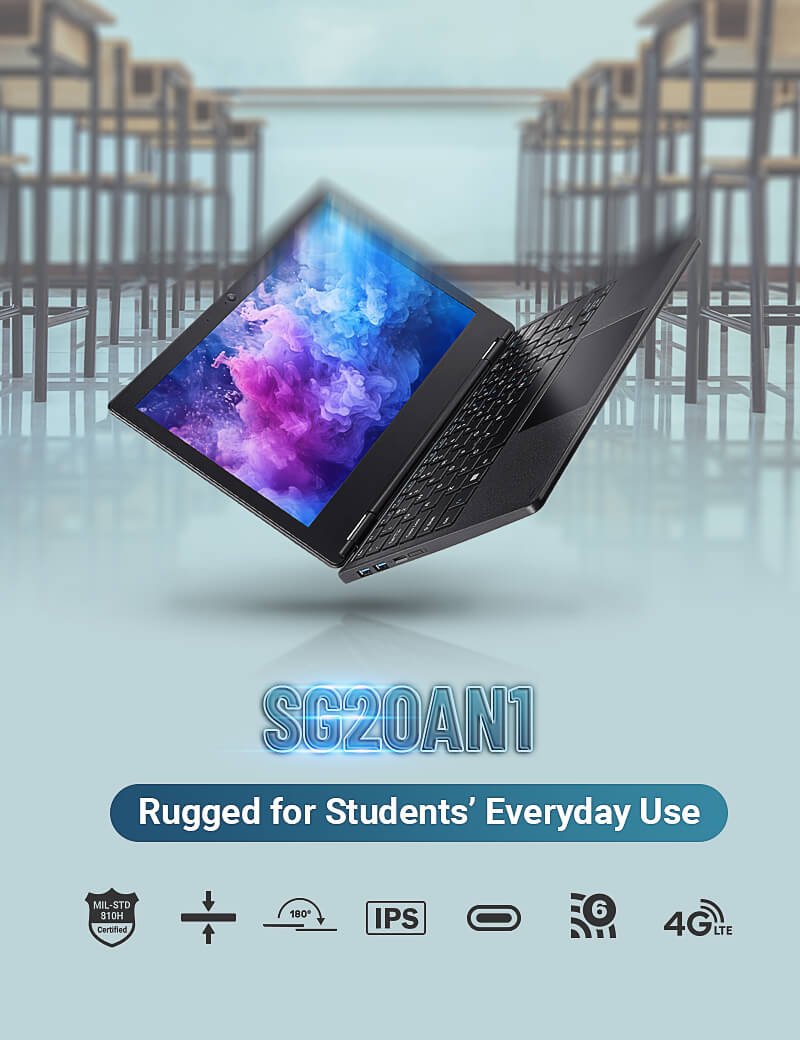

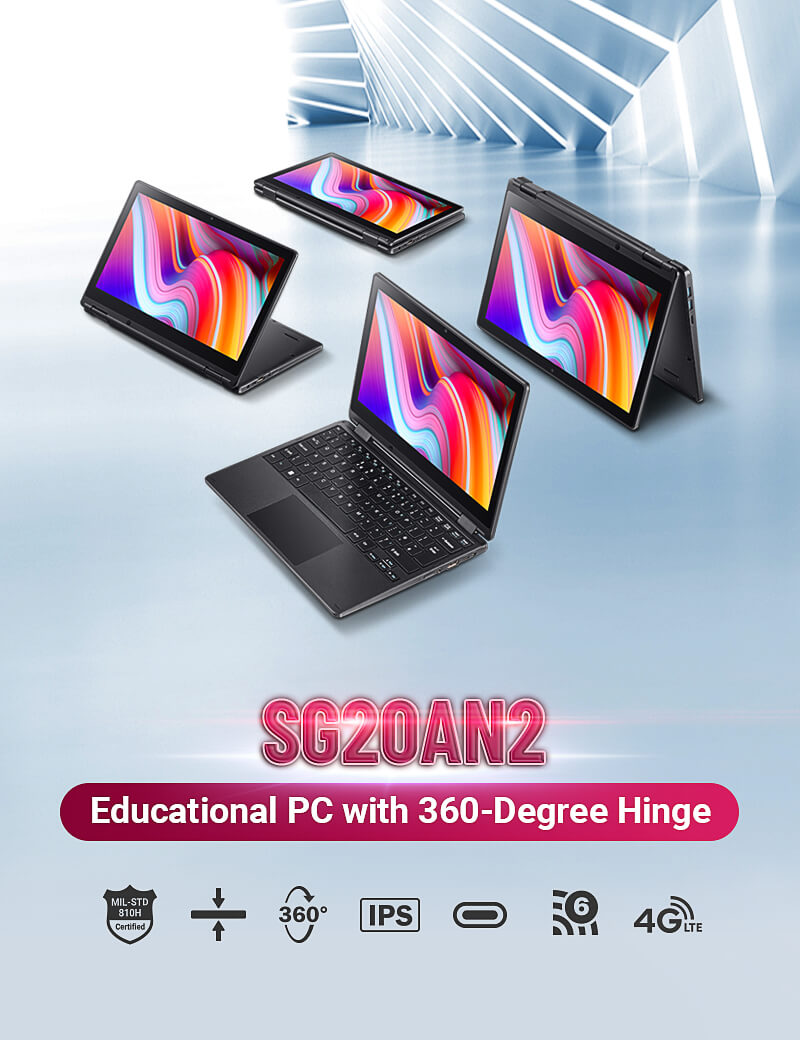

ECS Showcasing Latest Laptops at 2024 Hong Kong Global Sources Electronics Show

2024/04/02

Event News

ECSIPC Introduces LIVA Z5 Series Mini PCs for Industrial Applications

2024/03/14

Event News